Google Cloud Storage tutorial

On this page:

- Overview

- Procedure

- Prerequisites

- Step 1: Register a GCS bucket as a volume

- 1a: Create an IAM (Identity and Access Management) user

- 1b: Authorize this IAM user to access your bucket

- 1c: Register a bucket

- Step 2: Make an object from the bucket available on the Platform

- 2a: Launch an import job

- 2b: Check if the import job has completed

The Volumes API contains two types of calls: one to connect and manage cloud storage, and the other to import and export data to and from a connected cloud account.

Before you can start working with your cloud storage via the Platform, you need to authorize the Platform to access and query objects on that cloud storage on your behalf. This is done by creating a "volume".

A volume enables you to treat the cloud repository associated with it as external storage for the Platform. You can 'import' files from the volume to the Platform to use them as inputs for computation. Similarly, you can write files from the Platform to your cloud storage by 'exporting' them to your volume. Learn more about working with volumes.

The BioData Catalyst powered by Seven Bridges uses Amazon Web Services as a cloud infrastructure provider. This affects the cloud storage you can access and associate with your Platform account. For instance, you have full read-write access to your data stored in Amazon Web Services' S3 and read-only access to data stored in Google Cloud Storage.

This short tutorial will guide you through setting up a volume for a GCS bucket. You'll register your GCS bucket as a volume and make an object from the GCS bucket available on the Platform.

Note that as the Platform runs on AWS, you cannot export a file from the Platform to a GCS bucket, as this involves writing to that GCS bucket.

Once a volume is created, you can issue import and export operations to make data appear on the Platform or to move your Platform files to the underlying cloud storage provider.

In this tutorial, we assume you want to connect to a Google Cloud Storage bucket. The procedure will be slightly different for other cloud storage providers, such as an Amazon S3 bucket. For more information, please refer to our list of supported cloud storage providers.

To complete this tutorial, you will need:

1. A Google Cloud Platform (GCP) account.

2. One or more buckets on this GCP account via Google Cloud Storage (GCS).

3. One or more objects (files) in your target bucket.

4. An authentication token for the Platform. Learn more about getting your authentication token. Note that you cannot write to a GCS bucket from the Platform.

##Step 1: Register a GCS bucket as a volume

To set up a volume, you have to first register a GCS bucket as a volume. Volumes mediate access between the Platform and your buckets, which are local units of storage in GCS.

You can register a GCS bucket as a volume by following the steps below.

###1a: Create an IAM (Identity and Access Management) user

- Log into the Google Cloud Platform console.

- Search for IAM in the top navigation bar. Select IAM & Admin from the drop-down menu.

- Click Service accounts in the left sidebar.

- Click + Create service account below the search bar.

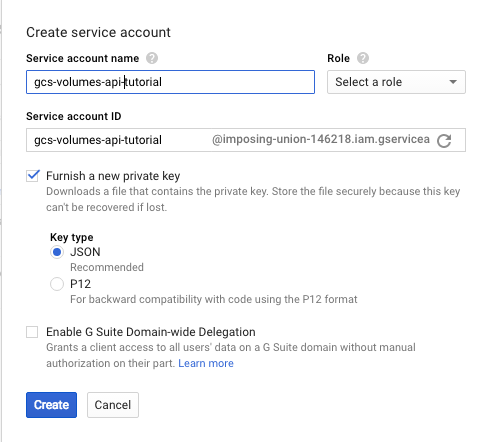

- Fill in account details, as shown below, making sure that:

  - Furnish a new private key is checked

  - A Key type of JSON is selected

  - Enable Google Apps Domain-wide Delegation is unchecked

- Click Create to finish.

Your browser will download a JSON file containing the credentials for this user. Keep this file safe.

###1b: Authorize this IAM user to access your bucket

- Use the drop-down menu next to Google Cloud Platform to navigate to Storage.

- Locate your bucket and click the three vertical dots to the far left of your bucket's name.

- Click Edit bucket permissions.

- Click + Add item.

- From the drop-down menu, select User and enter the service account client's email in the next box. This email is located in the JSON downloaded in the previous section.

- From the drop-down menu, select Reader for Read Only (RO) access or Writer for Read-write (RW) access.

- Click Save.

- Select the three vertical dots to the far left of your bucket's name once more.

- Click Edit object default permissions.

- Click + Add item.

- From the drop-down menu, select User and enter the service account client's email in the next box.

- From the next drop-down menu, select Reader.

- Click Save.
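The service account email used in the steps above, and the private key needed later when registering the volume, can both be read from the downloaded JSON key file. The following is a minimal sketch, assuming the file is a standard Google service account key containing `client_email` and `private_key` fields; the function name and the file path in the usage note are hypothetical.

```python
import json


def load_service_account_fields(key_path):
    """Read a service account key file and return the two fields a volume needs.

    Assumes the file is the JSON key downloaded when the service account
    was created, with "client_email" and "private_key" entries.
    """
    with open(key_path, "r", encoding="utf-8") as fh:
        key_data = json.load(fh)
    return {
        "client_email": key_data["client_email"],
        "private_key": key_data["private_key"],
    }
```

For example, `load_service_account_fields("my-service-account.json")["client_email"]` gives the address to paste into the bucket permission dialogs. Treat the returned private key as a secret and avoid printing or logging it.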

At this point, you can associate the bucket with your Platform account by registering it as a volume.

###1c: Register a bucket

To register your bucket as a volume, make the API request to Create a volume, as shown in the HTTP request below. Be sure to paste in your authentication token for the X-SBG-Auth-Token key.

This request also requires a request body. Provide a name for your new volume, an optional description, and an object (service) containing the information in the table below. Specify the access_mode as RO for read-only permissions. Be sure to supply a bucket name and substitute in your own credentials.

| Key | Description of value |
|---|---|
| type (required) | This must be set to gcs. |
| bucket (required) | The name of your GCS bucket. |
| prefix (default: empty string) | If provided, the value of this parameter will be used to modify any object key before an operation is performed on the bucket. Even though Google Cloud Platform is not truly a folder-based store and allows for almost arbitrarily named keys, the prefix is treated as a folder name. This means that after applying the prefix to the name of the object, the resulting key will be normalized to conform to the standard path-based naming schema for files. For example, if you set the prefix for a volume to a10, and import a file with location set to test.fastq from the volume to the Platform, then the object that will be referred to by the newly created alias will be a10/test.fastq. |
| client_email | The client email address for the Google Cloud service account to use for operations on this bucket. This can be found in the JSON containing your service account credentials. |
| private_key | The private key for the Google Cloud service account to use for operations on this bucket. This can be found in the JSON containing your service account credentials. |
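To illustrate how a prefix combines with a location, here is a small sketch that reproduces the a10 example from the prefix description. The Platform's exact normalization rules are not spelled out in this tutorial, so `posixpath.normpath` is used only as a stand-in, and the function name is hypothetical.

```python
import posixpath


def resolve_object_key(prefix, location):
    """Join a volume prefix and a location into a single path-like object key.

    Assumption: the prefix behaves like a folder name and the result is
    normalized as a POSIX-style path. The real service may differ in details.
    """
    # Drop leading slashes so the location is always treated as relative.
    relative = location.lstrip("/")
    if not prefix:
        return posixpath.normpath(relative)
    return posixpath.normpath(posixpath.join(prefix, relative))


print(resolve_object_key("a10", "test.fastq"))  # a10/test.fastq
print(resolve_object_key("", "test.fastq"))     # test.fastq
```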

POST /v2/storage/volumes HTTP/1.1

Host: api.sb.biodatacatalyst.nhlbi.nih.gov

X-SBG-Auth-Token: 3259c50e1ac5426ea8f1273259740f74

content-type: application/json

{

"name": "tutorial_volume",

"description": "New google volume",

"service": {

"type": "gcs",

"bucket": "rfranklin-test-bucket",

"prefix": "",

"credentials": {

"client_email": "[email protected]",

"private_key": "-----BEGIN RSA PRIVATE KEY-----\nrand0mIBAAKCAQEAsXj4E7swo97szcOrAcraSbsGnNuTU1b/4llyspDa0lltZIKL\nfl5s3QoqbjUWqAZXkJexei85g49ULD8BGKH2r4EF+XyKcpoon4uIFcbmYcmsUXM\nJ3ujgyL5DbWnQZ6GrqgFNRFVVz/PuvTZOd6KFCrjbbtCxfKoXQrmCwFC/4NlFR3v\n1kavU81w201Mied3e+pxjfiQKAJOoy5I7kfuH20xfzHXWR2YHdQGbzOUZyPgmzZ6\nH6Ry39b7bgLVbyk3++e13KrsTEf58rRzUHLzlcUDcGyf8iTO2vA2qzcbrbovwqJr\n7H4ZfFllDMYQ/ISj4cmi+sz/hR43LUK86emrXwIDAQABAoIBADBr2fvAMbINsZm+\njjTh/ObrAWXgvvSZIx3F2/Z+cUW9Ioyu1ZJ3/uncMTF6iKD1ggSwbqVQIq7zKaWP\ndGNZ4sk62PEQSx8924iiNsGaIqyj5FmvuoD3SeiorR0hd+3+a67RpwIQpaE1ht7y\nmSYh4riX7w9sbU6G44rnQ1azVG1UHvk5ieOD4OPvJopuc6D6ow1oJOnHE0k8v3HY\n1FpLdWCL6nSERqXOI5w+tllG4NMUmTZ2jhaBSEM4PIJVO+24TM3XFCcvhZ7ipPMF\nP5B8hV4hDA4Av1Ei7iuRZlJsH4sRrtHJE3/FZLgqHRRvt/7w4c1xnwirNghtTNMb\nXVoaS/ECgYEA15vL3l22mIoePlcCxIgVCAxhKm6TVQZsAE2EaeVsJKDl0AgCtn/1\nThMIPPGkO8jmjqHGgA+FhjoUQuCCdIuON00mUpmUxZlwI5+uknuK597/zAjd6W8s\n7p9apvBUDfod0hwF9Jfw+aUtZm6EAUNR1Odbb+bpXp1luwfcesHe4QcCgYEA0rg8\nZBBwh2DetU6wWh2JIejBH5SfRUqtEwo5WiEZhrEQLazcpX4w5uvESnT+xd7qx3yC\n/vyzqmy+YwP92Ql0vZApdQoyKGHVntY/o3HYxZD3x+7BKThUs747WjdSo8SwBkSr\nxEzLBgTqqcho6UXvYTTEAg11F5yNYzbvVf4vROkCgYEAh6XtTamIB9Bd1rrHcv5q\nvPWM7DVFXGj96fLbLAS7VRAlhgyEKG2417YBqNYejb6Hz5TYXhll2F0SAkFd0hU7\nFG/lfHJDt04hz0fXfTFc4yTZqnSpqQPZMQfw8LajK2gA+v/Gf2xYn7fcKGW/h0vj\nYB9u16hfirdcGZ+Ih3MR1mECgYEAnq1b1KJIirlYm8FYrVOGe4FxRF2/ngdA05Ck\nZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvALJlQZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+CxZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+MjZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvALSi0sVSXpA=\n-----END RSA PRIVATE KEY-----"

}

},

"access_mode": "RO"

}
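The same request can be assembled programmatically. The sketch below uses only the Python standard library and mirrors the host, path, header, and body fields of the HTTP request above; the function name and its parameters are hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request

# Host and path taken from the HTTP request shown in this tutorial.
API_BASE = "https://api.sb.biodatacatalyst.nhlbi.nih.gov/v2"


def build_volume_request(token, name, bucket, client_email, private_key,
                         prefix="", description=""):
    """Build (but do not send) the POST request that registers a GCS volume."""
    body = {
        "name": name,
        "description": description,
        "service": {
            "type": "gcs",
            "bucket": bucket,
            "prefix": prefix,
            "credentials": {
                "client_email": client_email,
                "private_key": private_key,
            },
        },
        # GCS volumes are read-only on the Platform.
        "access_mode": "RO",
    }
    return urllib.request.Request(
        API_BASE + "/storage/volumes",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-SBG-Auth-Token": token,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send it, pass the returned object to `urllib.request.urlopen` and decode the JSON response. Keeping construction separate from sending makes it easy to inspect the body before any credentials leave your machine.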

You'll see a response providing the details for your newly created volume, as shown below.

{

"href": "https://api.sb.biodatacatalyst.nhlbi.nih.gov/v2/storage/volumes/rfranklin/tutorial_volume",

"id": "rfranklin/tutorial_volume",

"name": "tutorial_volume",

"access_mode": "RO",

"service": {

"type": "gcs",

"bucket": "rfranklin-test-bucket",

"prefix": "",

"endpoint": "https://www.googleapis.com",

"credentials": {

"client_email": "[email protected]",

"private_key": "-----BEGIN RSA PRIVATE KEY-----\nrand0mIBAAKCAQEAsXj4E7swo97szcOrAcraSbsGnNuTU1b/4llyspDa0lltZIKL\nfl5s3QoqbjUWqAZXkJexei85g49ULD8BGKH2r4EF+XyKcpoon4uIFcbmYcmsUXM\nJ3ujgyL5DbWnQZ6GrqgFNRFVVz/PuvTZOd6KFCrjbbtCxfKoXQrmCwFC/4NlFR3v\n1kavU81w201Mied3e+pxjfiQKAJOoy5I7kfuH20xfzHXWR2YHdQGbzOUZyPgmzZ6\nH6Ry39b7bgLVbyk3++e13KrsTEf58rRzUHLzlcUDcGyf8iTO2vA2qzcbrbovwqJr\n7H4ZfFllDMYQ/ISj4cmi+sz/hR43LUK86emrXwIDAQABAoIBADBr2fvAMbINsZm+\njjTh/ObrAWXgvvSZIx3F2/Z+cUW9Ioyu1ZJ3/uncMTF6iKD1ggSwbqVQIq7zKaWP\ndGNZ4sk62PEQSx8924iiNsGaIqyj5FmvuoD3SeiorR0hd+3+a67RpwIQpaE1ht7y\nmSYh4riX7w9sbU6G44rnQ1azVG1UHvk5ieOD4OPvJopuc6D6ow1oJOnHE0k8v3HY\n1FpLdWCL6nSERqXOI5w+tllG4NMUmTZ2jhaBSEM4PIJVO+24TM3XFCcvhZ7ipPMF\nP5B8hV4hDA4Av1Ei7iuRZlJsH4sRrtHJE3/FZLgqHRRvt/7w4c1xnwirNghtTNMb\nXVoaS/ECgYEA15vL3l22mIoePlcCxIgVCAxhKm6TVQZsAE2EaeVsJKDl0AgCtn/1\nThMIPPGkO8jmjqHGgA+FhjoUQuCCdIuON00mUpmUxZlwI5+uknuK597/zAjd6W8s\n7p9apvBUDfod0hwF9Jfw+aUtZm6EAUNR1Odbb+bpXp1luwfcesHe4QcCgYEA0rg8\nZBBwh2DetU6wWh2JIejBH5SfRUqtEwo5WiEZhrEQLazcpX4w5uvESnT+xd7qx3yC\n/vyzqmy+YwP92Ql0vZApdQoyKGHVntY/o3HYxZD3x+7BKThUs747WjdSo8SwBkSr\nxEzLBgTqqcho6UXvYTTEAg11F5yNYzbvVf4vROkCgYEAh6XtTamIB9Bd1rrHcv5q\nvPWM7DVFXGj96fLbLAS7VRAlhgyEKG2417YBqNYejb6Hz5TYXhll2F0SAkFd0hU7\nFG/lfHJDt04hz0fXfTFc4yTZqnSpqQPZMQfw8LajK2gA+v/Gf2xYn7fcKGW/h0vj\nYB9u16hfirdcGZ+Ih3MR1mECgYEAnq1b1KJIirlYm8FYrVOGe4FxRF2/ngdA05Ck\nZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvALJlQZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+CxZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvAL+MjZYl9Vl8pZqvAL+MZ4hpyYvs9CzX1KClL38XdaZ2ftKJB2tjzDZYl9Vl8pZqvALSi0sVSXpA=\n-----END RSA PRIVATE KEY-----"

}

},

"created_on": "2016-06-26T16:44:20Z",

"modified_on": "2016-06-26T16:44:20Z",

"active": true

}

##Step 2: Make an object from the bucket available on the Platform

Now that we have a volume, we can make data objects from the bucket associated with the volume available as "aliases" on the Platform. Aliases point to files stored on your cloud storage bucket and can be copied, executed, and organized like normal files on the Platform. We call this operation "importing". Learn more about working with aliases.

To import a data object from your volume as an alias on the Platform, follow the steps below.

###2a: Launch an import job

To import a file, make the API request to start an import job, as shown below. In the body of the request, include the key-value pairs in the table below.

| Key | Description of value |
|---|---|
| volume_id (required) | Volume ID from which to import the file. This consists of your username followed by the volume's name, such as rfranklin/tutorial_volume. |
| location (required) | Volume-specific location pointing to the file to import. This location should be recognizable to the underlying cloud service as a valid key or path to the file. Please note that if this volume was configured with a prefix parameter when it was created, the prefix will be prepended to location before attempting to locate the file on the volume. |
| destination (required) | This object should describe the Platform destination for the imported file. |
| project (required) | The project in which to create the alias. This consists of your username followed by your project's short name, such as rfranklin/my-project. |
| name | The name of the alias to create. This name should be unique to the project. If the name is already in use in the project, you should use the overwrite query parameter in this call to force any file with that name to be deleted before the alias is created. If name is omitted, the alias name will default to the last segment of the complete location (including the prefix) on the volume. Segments are considered to be separated with forward slashes ('/'). |
| overwrite | Specify as true to overwrite the file if a file with the same name already exists in the destination. |

POST /v2/storage/imports HTTP/1.1

Host: api.sb.biodatacatalyst.nhlbi.nih.gov

X-SBG-Auth-Token: 3259c50e1ac5426ea8f1273259740f74

content-type: application/json

{

"source":{

"volume":"rfranklin/tutorial_volume",

"location":"example_human_Illumina.pe_1.fastq"

},

"destination":{

"project":"rfranklin/my-project",

"name":"my_imported_example_human_Illumina.pe_1.fastq"

},

"overwrite": true

}
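As a rough illustration, the import request above could be built in Python as follows. The body layout (`source`, `destination`, `overwrite`) copies the example request; the function name and parameters are hypothetical, and nothing is sent over the network by this sketch.

```python
import json
import urllib.request

# Host and path taken from the HTTP request shown in this tutorial.
API_BASE = "https://api.sb.biodatacatalyst.nhlbi.nih.gov/v2"


def build_import_request(token, volume_id, location, project,
                         name=None, overwrite=False):
    """Build (but do not send) the POST request that starts an import job."""
    destination = {"project": project}
    # When name is omitted, the alias name defaults to the last segment
    # of the location, so the key is only included if a name was given.
    if name is not None:
        destination["name"] = name
    body = {
        "source": {"volume": volume_id, "location": location},
        "destination": destination,
        "overwrite": overwrite,
    }
    return urllib.request.Request(
        API_BASE + "/storage/imports",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-SBG-Auth-Token": token,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```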

The returned response details the status of your import, as shown below.

{

"href": "https://api.sb.biodatacatalyst.nhlbi.nih.gov/v2/storage/imports/trand0mgPWZbeXxtADETWtFkrE87JBSd",

"id": "trand0mgPWZbeXxtADETWtFkrE87JBSd",

"state": "PENDING",

"overwrite": true,

"source": {

"volume": "rfranklin/tutorial_volume",

"location": "example_human_Illumina.pe_1.fastq"

},

"destination": {

"project": "rfranklin/my-project",

"name": "my_imported_example_human_Illumina.pe_1.fastq"

}

}

Locate the id property in the response and copy this value to your clipboard. This id is an identifier for the import job, and we will need it in the following step.
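If you are scripting this step, the id can be pulled out of the response body instead of copied by hand. A minimal sketch, assuming the response is the JSON document shown above; the function name is hypothetical.

```python
import json
from urllib.parse import quote


def import_job_path(response_text):
    """Return the request path for checking the import job in a response body."""
    job = json.loads(response_text)
    # Percent-encode the id defensively before placing it in the path.
    return "/v2/storage/imports/" + quote(job["id"], safe="")
```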

###2b: Check if the import job has completed

To check if the import job has completed, make the API request to get details of an import job, as shown below. Simply append the import job id obtained in the step above to the path.

GET /v2/storage/imports/trand0mgPWZbeXxtADETWtFkrE87JBSd HTTP/1.1

Host: api.sb.biodatacatalyst.nhlbi.nih.gov

X-SBG-Auth-Token: 3259c50e1ac5426ea8f1273259740f74

content-type: application/json

The returned response details the state of your import. If the state is COMPLETED, your import has successfully finished. If the state is PENDING, wait a few seconds and repeat this step.
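The wait-and-repeat step can be automated with a simple polling loop. The sketch below takes a caller-supplied `fetch_state` function (hypothetical; it should perform the GET request above and return the `state` string), so the loop itself stays independent of any HTTP library. Only the COMPLETED and PENDING states are described in this tutorial; the sketch keeps polling on any other value until the attempt limit is reached and then returns the last state it saw.

```python
import time


def wait_for_import(fetch_state, interval_seconds=5.0, max_attempts=60,
                    sleep=time.sleep):
    """Poll an import job until it reports COMPLETED or attempts run out.

    fetch_state: zero-argument callable returning the job's current state.
    Returns the last state observed, so the caller can tell success
    ("COMPLETED") from a timeout or an unexpected state.
    """
    state = None
    for attempt in range(max_attempts):
        state = fetch_state()
        if state == "COMPLETED":
            return state
        # Pause between checks, but not after the final attempt.
        if attempt < max_attempts - 1:
            sleep(interval_seconds)
    return state
```

The interval and attempt limit are arbitrary defaults, not values recommended by the Platform. Adjust them to the size of the files you import.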

You should now have a freshly created alias in your project. To verify that the file has been imported, visit this project in your browser and look for a file with the name you set in destination or, if you omitted name, with the last segment of the object's location in your bucket.

Congratulations! You've now registered a GCS bucket as a volume and imported a file from the volume to the Platform. Learn more about connecting your cloud storage from our Knowledge Center.
